UXAI: Bridging ML Complexity and User Trust

Making AI explainability accessible to the people who can advocate for it most: designers and product teams.

Context

UC Berkeley School of Information

MIMS Program graduation capstone

Role

Product Designer in a group of four

Timeline

December 2019 – May 2020

Overview

A foundational resource for product teams new to AI, built during graduate school, still serving designers five years later.

UXAI is an explainable AI framework for early-career professionals, resource-constrained teams, and students. It's not expert-level material for seasoned AI practitioners. It's a starting point for those building their first AI products and learning to advocate for transparency.

I led the website design and helped conduct industry research with practitioners at Google and IBM. Our team also produced academic research on trust calibration between AI systems and different user types.

Note

This project predates LLMs and generative AI. The framework hasn't been updated since May 2020, yet it continues to draw ~1,000 monthly visitors as an educational resource.

The Challenge

Explainability knowledge was trapped in academic papers and ML research.

In 2019, "Explainable AI" was a growing field, but most resources targeted researchers and engineers. Product teams building AI features had few practical frameworks for thinking about when and how to make AI decisions transparent to users.

This gap hit hardest for teams without dedicated ML expertise: early-stage startups, small product teams, and students learning to design for AI.

Goal

Make explainability actionable for product teams just starting out, not just those with ML resources and expertise.

Research

Learning from academic literature and industry practitioners.

Our team conducted research across two tracks: an academic literature review on trust calibration between AI and users, and practitioner interviews at Google, IBM, and other organizations building AI products.

Insight

Explainability was rarely prioritized early in product development. Designers and PMs were often unaware of what was technically feasible or why it mattered. Smaller teams without AI specialists felt this gap most.

Relevant industry resources at the time

The Gap

The people best positioned to advocate for user-facing explainability (designers and PMs) lacked the frameworks to do so. The people with explainability expertise (ML researchers) weren't involved in product decisions. This problem was amplified for smaller teams without access to AI specialists.

How might we equip product teams to advocate for explainability without requiring ML expertise or dedicated AI resources?

Design Principles

The "Simplicity Cycle"

Meet teams where they are

Don't assume ML knowledge. Translate academic concepts into product language accessible to those just starting out.

Make it actionable, not just informational

Provide tools for real design workflows, not just reference material that requires expertise to apply.

Position designers as advocates

Frame explainability as a product decision, not just a technical one, so teams without ML specialists can still prioritize it.

Results

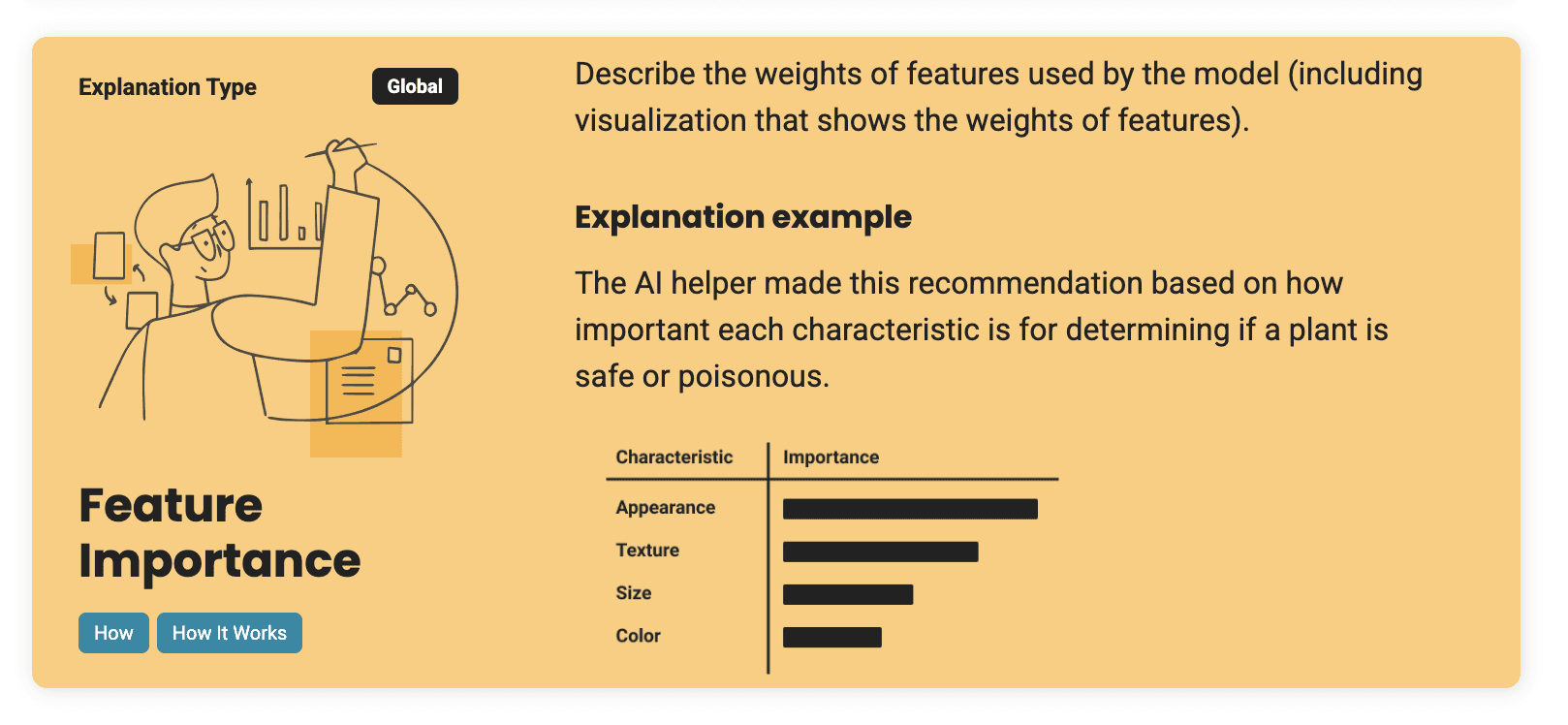

Explainable AI Framework

When and why explainability matters.

A structured approach to understanding when explainability matters and what forms it can take. It is written for product teams without ML backgrounds and designed to be referenced during product development.

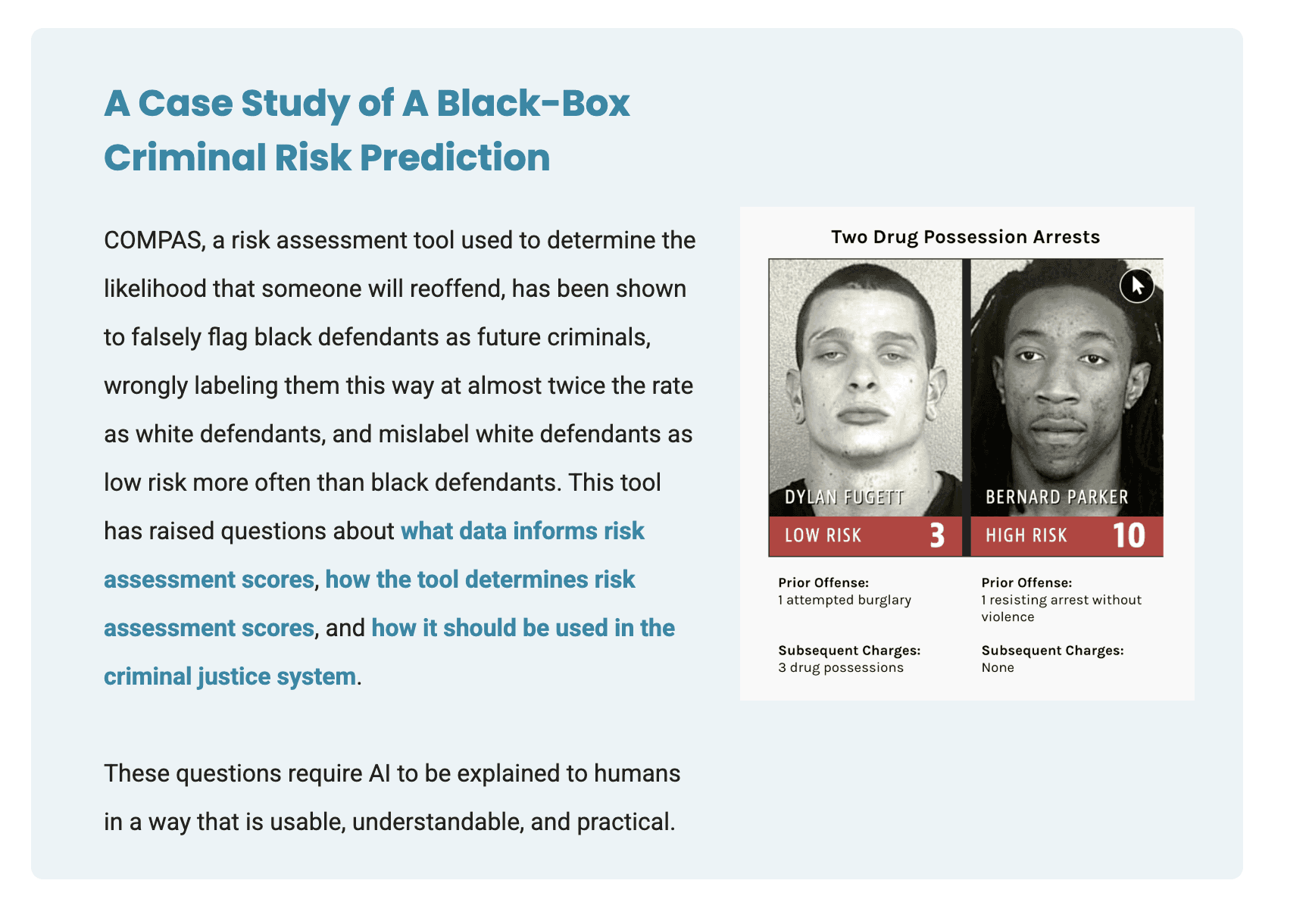

Example of making the case for explainable AI

Design Strategy Guide

Positioning designers as explainability advocates.

Practical guidance on integrating transparency into product decisions. Aimed at helping designers and PMs make the case for explainability even when their teams lack dedicated AI expertise.

Strategy for "Who are your users and why do they need an explanation?"

Strategy for "When do users need an explanation?"

Brainstorming Toolkit

Cards for AI design sessions.

Card-based tools for product teams to use during design sessions. The cards offer prompts and frameworks for thinking through AI transparency without needing ML expertise, keeping the barrier to entry low for teams new to AI product development.