Role

Staff Product Designer

Responsibilities

UX Research, Usability Testing, Wireframing, Prototyping, Visual Design

Duration

June 2024 - August 2024

Overview

Designing RAG for claim-by-claim trust

RAG-V is a reference pattern that integrates retrieval-augmented generation (RAG) with a verification layer. In collaboration with researchers, I led the design of a workflow that verifies each claim in an AI-generated summary against source material. The goal was to ensure accuracy, reduce hallucinations, and build user trust, especially for national security users who have very low tolerance for errors.

The Problem

High-stakes users require zero hallucinations

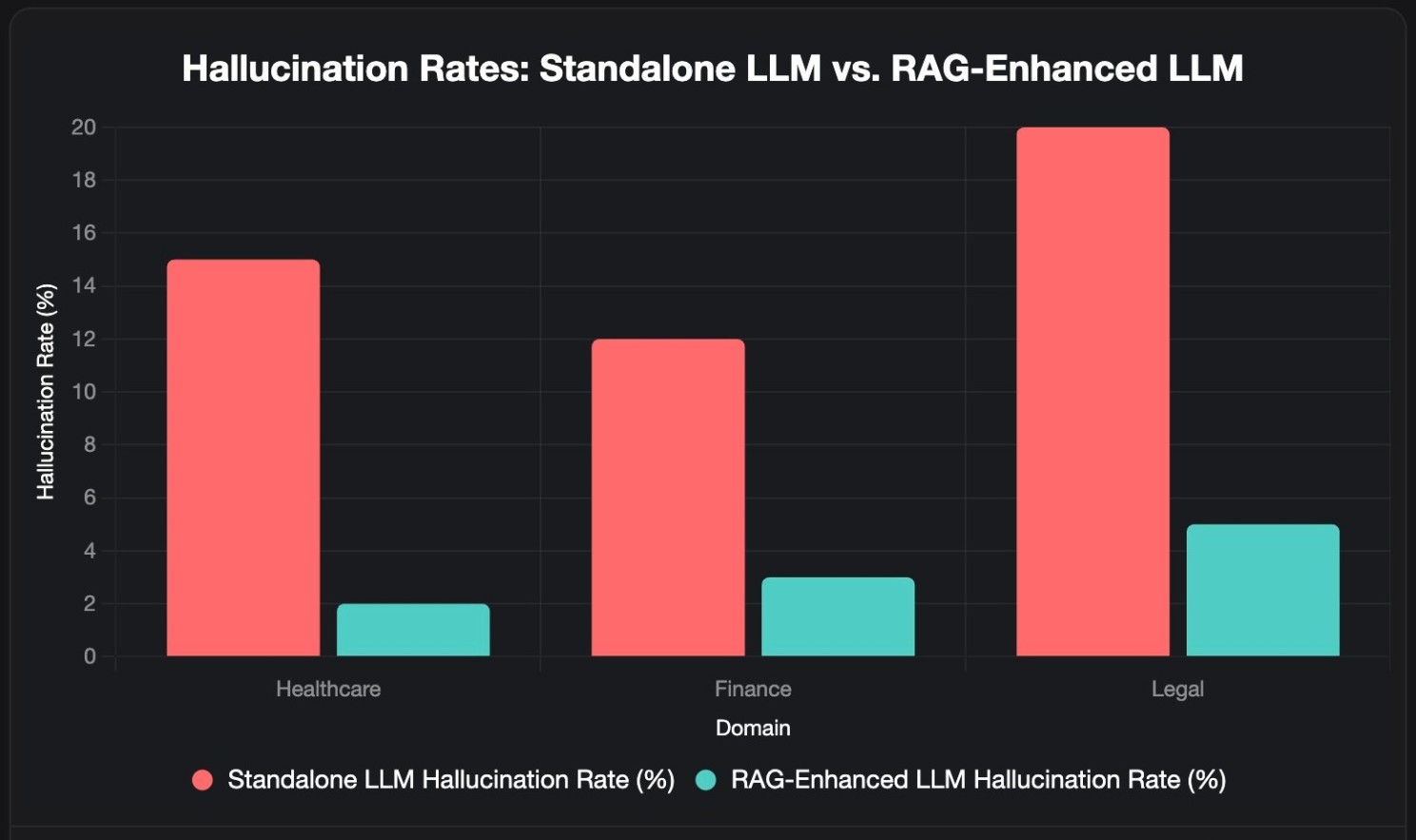

Traditional summary workflows offered only sentence-level transparency, showing whether a sentence was grounded in evidence but not which specific claims were supported. Even with RAG, AI-generated summaries still carried a 5% to 10% error rate. For users in high-stakes environments such as intelligence analysts, even minor hallucinations could have critical consequences.

Note: Data is illustrative, based on trends from Ke et al. (2025).

Ke, Y. H., Jin, L., Elangovan, K., Abdullah, H. R., Liu, N., Sia, A. T. H., Soh, C. R., Tung, J. Y. M., Ong, J. C. L., Kuo, C. F., Wu, S. C., Kovacheva, V. P., & Ting, D. S. W. (2025, April 5). Retrieval augmented generation for 10 large language models and its generalizability in assessing medical fitness. npj Digital Medicine. https://www.nature.com/articles/s41746-025-01519-z

Research Insights

Learning from existing citation and verification behaviors

Understanding how different groups handle evidence and attribution helped shape a design pattern that feels both familiar and trustworthy to users.

Preliminary Research Insight

Academic citation patterns

Academic writing uses footnotes or inline citations to ground claims, establishing a trusted pattern for linking evidence.

Citation example from Citation Machine

Preliminary Research Insight

Intel analyst workflows

Intel analysts rely on similar citations but emphasize excerpts and metadata such as organization, author, and timestamp to validate and share information.

Preliminary Research Insight

LLM product conventions

ChatGPT, Gemini, Claude, and Perplexity use hoverable chips for citations, with Gemini also offering on-demand verification. These approaches verify at the sentence level, so a hallucinated claim can hide inside an otherwise cited sentence, and it is unclear which claim the citation actually supports.

Reference pattern from Perplexity

On-demand verification from Gemini

Proposed Solutions

Technical proposal

1. Breaks down generated text into individual claims

2. Cross-checks each claim against retrieved sources

3. Iteratively regenerates summaries until claims are verified
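
The three steps above can be sketched roughly as follows. This is a minimal illustration, not Primer's implementation: the names `split_into_claims`, `is_supported`, `rag_v`, and `toy_generate` are hypothetical, sentence splitting stands in for real claim extraction, and a substring match stands in for model-based verification.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class ClaimCheck:
    claim: str
    supported: bool


def split_into_claims(summary: str) -> List[str]:
    # Assumption: one sentence == one claim. A real system would likely
    # use a model to extract atomic claims from each sentence.
    return [s.strip() for s in summary.split(".") if s.strip()]


def is_supported(claim: str, sources: List[str]) -> bool:
    # Assumption: naive case-insensitive substring match as the verifier.
    return any(claim.lower() in src.lower() for src in sources)


def verify(summary: str, sources: List[str]) -> List[ClaimCheck]:
    # Step 1 (split) and step 2 (cross-check) combined.
    return [ClaimCheck(c, is_supported(c, sources)) for c in split_into_claims(summary)]


def rag_v(
    generate: Callable[[List[str]], str],
    sources: List[str],
    max_passes: int = 3,
) -> Tuple[List[str], List[ClaimCheck]]:
    # Step 3: regenerate until every claim verifies or passes run out.
    drafts: List[str] = []
    rejected: List[str] = []
    checks: List[ClaimCheck] = []
    for _ in range(max_passes):
        summary = generate(rejected)  # generator is told which claims failed
        drafts.append(summary)
        checks = verify(summary, sources)
        failed = [c.claim for c in checks if not c.supported]
        if not failed:
            break
        rejected.extend(failed)
    return drafts, checks


def toy_generate(rejected: List[str]) -> str:
    # Hypothetical generator: emits fixed candidates minus rejected ones.
    candidates = ["The convoy left at dawn", "It carried fuel", "It had forty trucks"]
    return ". ".join(c for c in candidates if c not in rejected) + "."


sources = ["Report A: the convoy left at dawn. It carried fuel and food."]
drafts, checks = rag_v(toy_generate, sources)
```

Returning every draft alongside the final per-claim checks keeps the intermediate versions available for review rather than discarding them after regeneration.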

Visit Primer's Research to read more details

RAG-V pipeline illustration

Design Principles

Designing for scannability, transparency, and control

This workflow reduced hallucination rates and improved review efficiency by embedding verification directly into the analyst’s workflow.

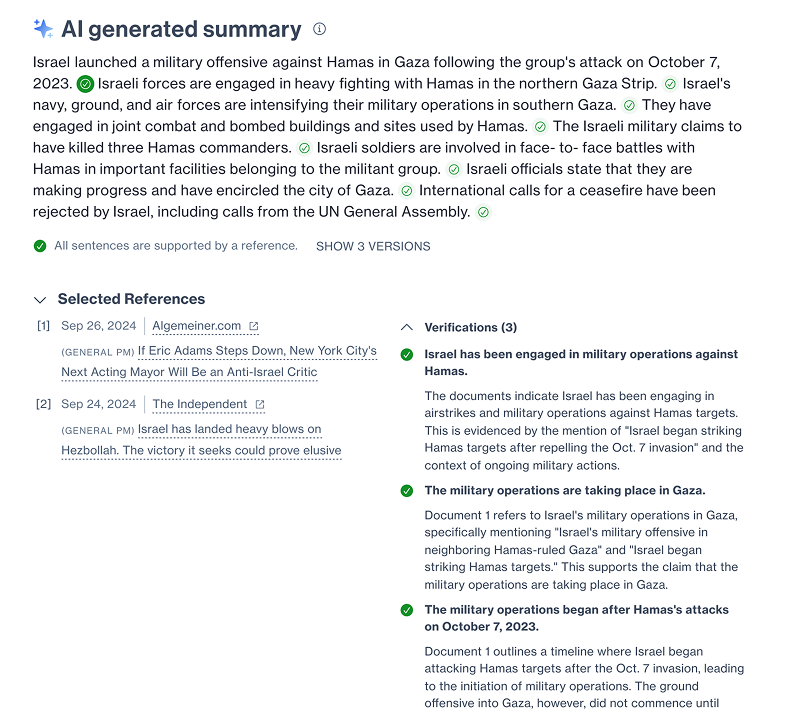

Highlighted view of verified versus unverifiable claims

Provides analysts with a quick, scannable overview of the verification status. Visual indicators guide them to the most critical parts of the summary first.

Verification status as citation chip for scannability

Side-by-side review of claims with referenced documents

Displays each claim alongside its reasoning and the original evidence, allowing users to confirm accuracy within the context of existing document viewers in their secure on-prem environment.

Click to read source materials and verification details

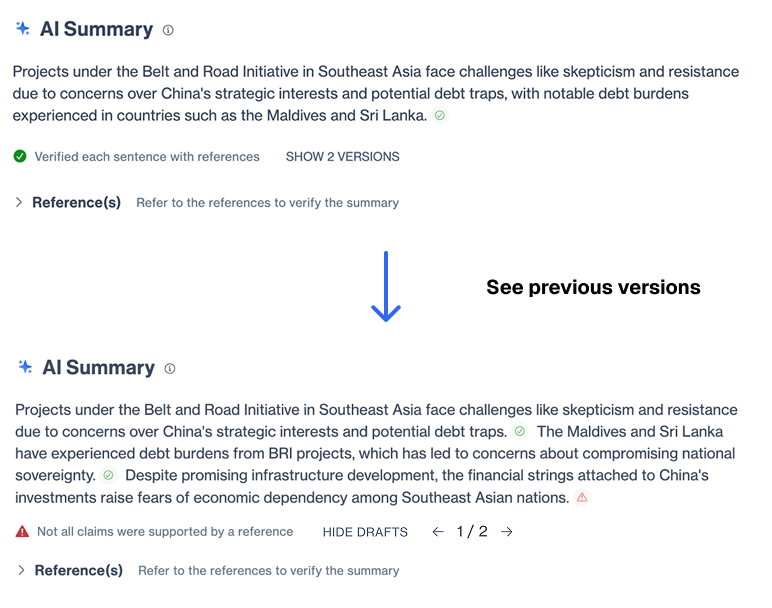

Option to view multiple drafts of summaries

Gives analysts transparency into different versions of the summary. They can review how content changed across verification passes and recover valuable insights that might have been removed during regeneration.
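
As a rough illustration of how removed content could be surfaced between drafts, the sketch below lists claims present in an earlier draft but missing from a later one. The function names are hypothetical, and treating each sentence as a claim is a simplifying assumption.

```python
from typing import List


def claims_of(draft: str) -> List[str]:
    # Assumption: one sentence == one claim.
    return [s.strip() for s in draft.split(".") if s.strip()]


def dropped_claims(earlier: str, later: str) -> List[str]:
    # Claims in the earlier draft that no longer appear in the later one,
    # in their original order.
    kept = set(claims_of(later))
    return [c for c in claims_of(earlier) if c not in kept]


removed = dropped_claims(
    "The convoy left at dawn. It carried fuel. It had forty trucks.",
    "The convoy left at dawn. It carried fuel.",
)
```

Here `removed` holds the single claim that was cut during regeneration, which an analyst could then review manually.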

Review generation iteration through multiple drafts

Final Results

RAG-V in action

Integrating tabs to track multiple follow-ups

The final RAG-V workflow distilled three core design principles: efficiency, granularity, and transparency. Through iteration, these principles shaped claim-level verification, clear evidence views, and reduced latency, resulting in a system that not only minimized hallucinations but also fit seamlessly into analysts’ review processes, driving adoption and trust.

Trust became the new baseline

RAG-V achieved a significant reduction in unverified content and increased analyst confidence in generative features. Customers requested its extension beyond search summaries, and it became a baseline explainability pattern across Primer products.

"This approach gives me much greater confidence in the accuracy of summaries! When can I expect to see RAG-V applied to other generative text beyond search summaries?"

— One customer after a RAG-V demo

Retrospective

Verification builds trust, but speed builds value

RAG-V demonstrated the value of granular claim-level verification in building trust with generative AI. The tradeoff was speed and content richness, two factors that limited the perceived value of summaries. Future iterations will focus on balancing trustworthiness with efficiency and utility, ensuring analysts gain both confidence and insight from AI outputs.